Artificial intelligence (AI) has become part of everyday life, from organizing our schedules and recommending shows to helping with everyday work tasks.

The Rise of Artificial Intelligence

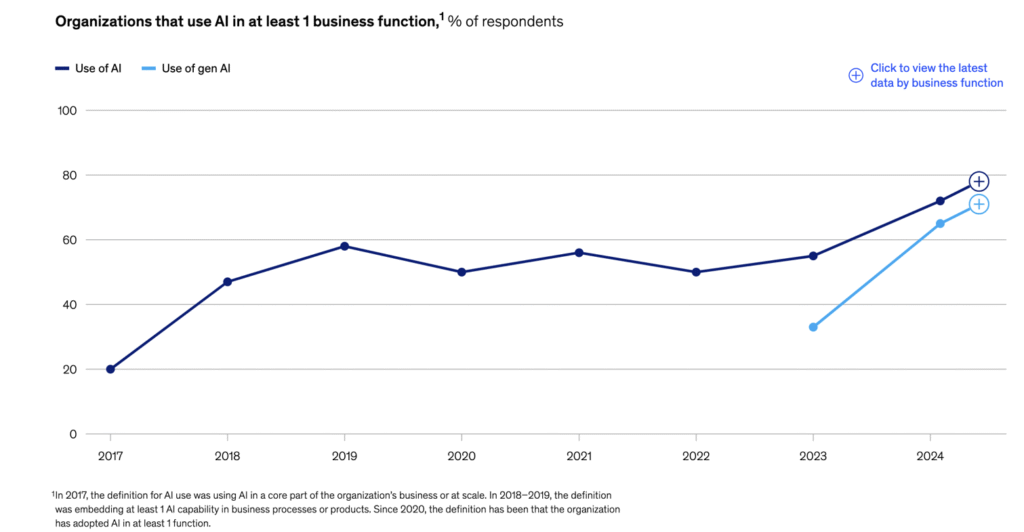

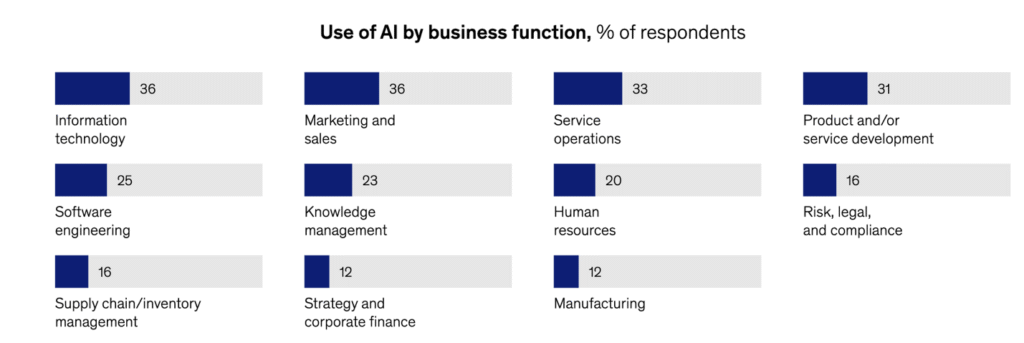

Over the past few years, AI has gone from a niche technology to a mainstream tool shaping nearly every industry (see Figures 1 and 2). From chatbots that answer customer questions to software that reviews legal documents in seconds, AI is changing how people work, communicate, and make decisions (McKinsey 2024).

Source (Figures 1 and 2): McKinsey Global Surveys (2024)

The steady rise in AI usage is understandable: the technology has unlocked significant efficiency gains by saving time, automating tasks, and making information more accessible than ever before. But with this progress come new challenges: accuracy, accountability, and ethics. As AI becomes more integrated into our daily and professional lives, it’s essential to understand how it works and why it sometimes gets things wrong.

There’s one question we should all ask – can AI actually tell the truth? The answer is more complicated than most people think.

How AI Is Built: From Data to Intelligence

At its core, AI isn’t magic: it’s math and data. Systems like ChatGPT or Google’s Gemini are powered by what’s called a Large Language Model (LLM). These models are trained on massive datasets: text from books, articles, court decisions, websites, and conversations from across the internet. During training, the AI doesn’t store facts the way a database does. Instead, it learns patterns: it studies how words and ideas typically appear together and learns to predict what comes next in a sentence. After billions of examples, it becomes remarkably skilled at sounding like a human, whether it is answering questions, summarizing documents, or writing emails.

However, this ability doesn’t mean it understands the world. AI doesn’t “know” what’s true or false; it only recognizes what sounds plausible. That’s why it can produce something called a “hallucination” – a confident, polished, but entirely incorrect statement.

→ For example, when asked to cite a legal case, an AI might invent one that sounds authentic but doesn’t exist. This isn’t intentional dishonesty – it’s a limitation of how the system was trained.
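To make the idea concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT or Gemini is actually built; real LLMs use neural networks trained on vastly more text. The three training sentences, the case names, and the function names (`train`, `predict_next`, `sounds_plausible`) are all invented for this illustration. The sketch only counts which word tends to follow which, and then shows that a citation the model has never seen can still look fully consistent with the patterns it learned.

```python
from collections import Counter, defaultdict

# Hypothetical three-sentence "training set", invented for this illustration.
CORPUS = [
    "the court ruled in smith v jones",
    "the court ruled in favor of the tenant",
    "the tenant appealed in doe v roe",
]


def train(sentences):
    """Count how often each word is followed by each other word."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            follows[current][following] += 1
    return follows


def predict_next(follows, word):
    """Return the word seen most often after `word`, or None if unseen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


def sounds_plausible(follows, sentence):
    """True if every adjacent word pair in `sentence` occurred in training."""
    words = sentence.split()
    return all(b in follows.get(a, {}) for a, b in zip(words, words[1:]))


model = train(CORPUS)

# A citation that appears nowhere in the training text.
invented = "the court ruled in doe v jones"

print(predict_next(model, "court"))       # ruled
print(invented in CORPUS)                 # False
print(sounds_plausible(model, invented))  # True
```

The invented citation mixes pieces of two real training sentences. Every word pair in it has been seen before, so a pattern-based model has no way to tell it apart from a genuine case. That is the toy version of a hallucination: the output fits the patterns, but nothing in the data ever said it was true.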

Why This Matters: Truth vs. Technology

In everyday life, an AI error might just cause a laugh or an awkward email. But in the legal, business, and media worlds, false or misleading AI output can have serious consequences.

1. Defamation and Reputation

If an AI tool creates or spreads a false statement that harms someone’s reputation, it can lead to defamation claims. But determining who’s at fault – the user, the developer, or the platform – is legally complex and still evolving.

2. Misinformation and Advertising

Businesses using AI to create marketing content must ensure accuracy. If AI-generated claims are misleading, companies can face regulatory penalties or consumer lawsuits.

3. Compliance and Due Diligence

When AI tools are used in document review, contract drafting, or due diligence, errors can be costly. A missed clause or false summary could expose a company to financial or legal risks (Harvard Law Review, 2024).

Who’s Responsible When AI Spreads False Information?

Right now, the responsibility typically falls on the user: the person or organization deploying the tool. Most AI platforms include terms stating that users are responsible for verifying information and ensuring compliance with laws. This is where legal awareness becomes essential. Companies using AI should establish clear internal policies outlining how these tools are used, who verifies their outputs, and when human review is required.

Some governments are also stepping in. The EU’s AI Act, for example, introduces transparency and safety requirements for companies using or developing AI systems. Similar guidelines are emerging in the United States and Asia. The legal framework is still catching up, but it’s moving quickly (Harvard Law Review, 2024).

How to Stay Smart (and Safe) When Using AI

1. Verify, verify, verify. Always fact-check AI-generated information, even if it sounds perfect.

2. Train your teams. Make sure employees understand both the potential and the limits of AI.

3. Disclose AI use when appropriate. Transparency builds trust with clients and consumers.

4. Include disclaimers, especially when AI is used to generate public-facing content.

5. Consult legal counsel. If you’re unsure about compliance or liability, get professional advice before publishing or relying on AI outputs.

Final Thoughts

AI is not going away; it’s becoming a standard part of how we work, communicate, and make decisions. But while technology can make us faster, only human judgment can keep our work accurate, ethical, and fair. At its best, AI is a powerful assistant. At its worst, it can spread misinformation at lightning speed. The difference lies in how we use it: with awareness, integrity, and responsibility. As AI continues to evolve, so must the legal frameworks that govern it. The challenge ahead is ensuring that innovation moves forward without losing sight of truth and accountability, values that no algorithm can replace.

If you have any questions, feel free to contact Pierce & Kwok LLP for more information.

Sources

- McKinsey & Company. The State of AI in Early 2024: Gen AI Adoption Spikes and Starts to Generate Value. 2024. mckinsey.com

- Federal Trade Commission (FTC). AI and Consumer Protection: Guidance for Businesses. 2023. ftc.gov

- Brookings Institution. AI and the Spread of Misinformation: Understanding the Risks and Policy Responses. 2023. brookings.edu

- Harvard Law Review. Who’s Liable When AI Gets It Wrong? Emerging Legal Theories in the United States. 2024. harvardlawreview.org

Figures 1 and 2

- McKinsey & Company. The State of AI: How Organizations Are Rewiring to Capture Value. 2024. mckinsey.com